Transportation and logistics businesses have seen an upsurge in trade over the last few years, with increasing ecommerce and online sales driving demand. This welcome growth has, however, been accompanied by ever-more demanding consumers who expect certainty and reliability in service delivery, and by a more competitive landscape that forces prices down and tightens margins.

According to a recent study from BCG, the COVID-19 pandemic contributed to an even greater boost to global ecommerce volumes, with US ecommerce sales alone increasing by 58% year on year. However, major parcel and postal operators are suffering from decreasing profitability and an overall worsening financial situation. Today’s supply chains are more dynamic and fragile than in the past and different tools are needed to sustain the scalability and profitability of these logistics businesses.

Our Solution

We have been working together with AWS to deliver machine learning to a range of companies in the transportation and logistics industry, helping them to cope with these new challenges and imperatives.

Together, we are driving transformative change for our customers in the transportation and logistics space by impacting some of the most critical areas of their end-to-end operations including:

- Predicting accurate delivery times and feeding back delivery statuses quickly and effectively.

- Optimizing transport and delivery costs, including carbon footprint, and reducing “attempted” deliveries.

- Forecasting demand and managing resources to make sure that the delivery network is operating at optimal capacity.

- Enriching the consumer user experience and product offering with better accuracy and communication.

- Responding quickly to unplanned changes and challenges, like COVID-19.

In this blog post, we look at ML in action: how it is affecting transport and logistics businesses in the real world today.

This culture of innovation led Mohammad Sleeq, Aramex CDO, to set out a core new strategy called the “Digital Experience” in 2018, as shown in Figure 1. At the heart of the strategy is the Digital Core. The Digital Core uses big data and advanced analytics, including machine learning, to revolutionize the “last mile” for customers. The last mile in logistics refers to the final element of the delivery process. It is often the phase most subject to change and hardest to predict, but it is the most important for the customer. An example of where Aramex has delivered massive improvement for its customers is in predicting the transit time using machine learning, providing far greater accuracy in the last mile.

Creating the Transit Time ML Model

To predict the transit time more accurately, either a single-leg or a multi-leg approach can be used. The multi-leg approach generally performs better, because it lets you assess how different features affect each leg differently. Examples of the legs for international shipments are:

- Pick up to origin: The collection of the parcel and its arrival at the processing hub.

- The flight time from origin to destination: The parcel leaving the processing hub, being put on a flight, the flight duration, and the parcel being unloaded at the other end. Here, the most important thing is the distance of the flight.

- Customs time: The amount of time it takes to clear customs is very dependent on the value of the shipment, any tax due, and the day of the week, as the number of shipments that can be handled and the hours of operation of the customs staff differ by day of the week.

- Last mile destination: The parcel leaving customs, arriving at the processing hub for fulfillment, being loaded onto the van, and then being handed over to the final customer.
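The multi-leg idea can be sketched as one estimator per leg, with the total transit time being the sum of the per-leg estimates. The snippet below is only an illustration: the leg functions, feature names (`flight_distance_km`, `declared_value_usd`, `arrival_weekday`), and every coefficient are invented placeholders standing in for trained models, not Aramex's actual models or data.

```python
from typing import Callable, Dict, Tuple

Shipment = Dict[str, float]
LegModel = Callable[[Shipment], float]  # returns an estimate in hours


def pickup_leg(shipment: Shipment) -> float:
    # Placeholder: a fixed collection-to-hub time (assumed value).
    return 12.0


def flight_leg(shipment: Shipment) -> float:
    # Placeholder: handling time plus distance at an assumed 800 km/h.
    return 6.0 + shipment["flight_distance_km"] / 800.0


def customs_leg(shipment: Shipment) -> float:
    # Placeholder: higher-value shipments and weekend arrivals take longer.
    base = 4.0 if shipment["declared_value_usd"] < 100 else 10.0
    weekend_delay = 24.0 if shipment["arrival_weekday"] >= 5 else 0.0
    return base + weekend_delay


def last_mile_leg(shipment: Shipment) -> float:
    # Placeholder: a fixed hub-to-customer time (assumed value).
    return 18.0


LEGS: Dict[str, LegModel] = {
    "pickup_to_origin": pickup_leg,
    "flight": flight_leg,
    "customs": customs_leg,
    "last_mile": last_mile_leg,
}


def predict_transit_hours(shipment: Shipment) -> Tuple[Dict[str, float], float]:
    """Run every leg model and return the per-leg estimates and their sum."""
    per_leg = {name: model(shipment) for name, model in LEGS.items()}
    return per_leg, sum(per_leg.values())


example = {"flight_distance_km": 4000, "declared_value_usd": 250, "arrival_weekday": 5}
per_leg, total = predict_transit_hours(example)
print(per_leg, total)
```

Keeping the legs separate means each one can be retrained or inspected on its own, for example to see whether customs or the last mile contributes most to a late delivery.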

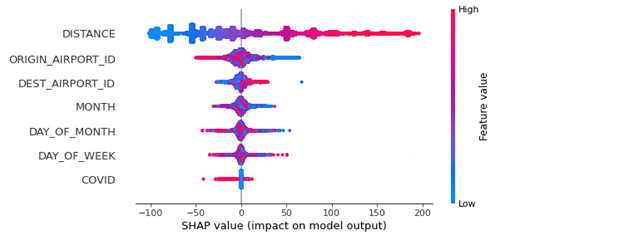

For simplicity, we will look at the flight time from origin to destination data (please note, this is not our data – that’s safely locked away). It clearly shows some of the standard features you should consider for your model and how important they are:

Because the target value is a continuous number, a regression model (rather than a classifier) is best suited, and you may need to do additional feature engineering, depending on your dataset and algorithm. The transit time model uses the Amazon SageMaker XGBoost algorithm and, for the real dataset, uses over 12 features (both numerical and categorical). Training used 6.3 million rows of data and took eight hours on a single ml.m4.4xlarge instance.
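As an illustration of the kind of feature engineering mentioned above, the following sketch one-hot encodes categorical columns and writes rows in the CSV layout that the SageMaker built-in XGBoost algorithm expects for training: no header row and the target in the first column. The column names and sample rows are made up for the example and are not the real feature set.

```python
import csv
import io
from typing import Dict, List, Sequence


def build_vocab(rows: List[dict], categorical: Sequence[str]) -> Dict[str, List[str]]:
    """Collect the sorted distinct values of each categorical column."""
    return {col: sorted({row[col] for row in rows}) for col in categorical}


def encode_row(row: dict, target: str, numerical: Sequence[str],
               vocab: Dict[str, List[str]]) -> List[float]:
    """Return [target, numerical features..., one-hot categorical features...]."""
    values = [float(row[target])]
    values += [float(row[col]) for col in numerical]
    for col, categories in vocab.items():
        values += [1.0 if row[col] == category else 0.0 for category in categories]
    return values


def to_training_csv(rows: List[dict], target: str, numerical: Sequence[str],
                    categorical: Sequence[str]) -> str:
    """Serialize rows as header-less CSV with the target in the first column."""
    vocab = build_vocab(rows, categorical)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for row in rows:
        writer.writerow(encode_row(row, target, numerical, vocab))
    return buffer.getvalue()


# Hypothetical sample rows: flight leg duration with one numerical
# and one categorical feature.
sample_rows = [
    {"transit_hours": 10, "distance_km": 4000, "origin": "DXB"},
    {"transit_hours": 3, "distance_km": 1000, "origin": "AMM"},
]
print(to_training_csv(sample_rows, "transit_hours", ["distance_km"], ["origin"]))
```

The same vocabulary has to be reused at inference time so that the one-hot columns line up with those seen during training.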

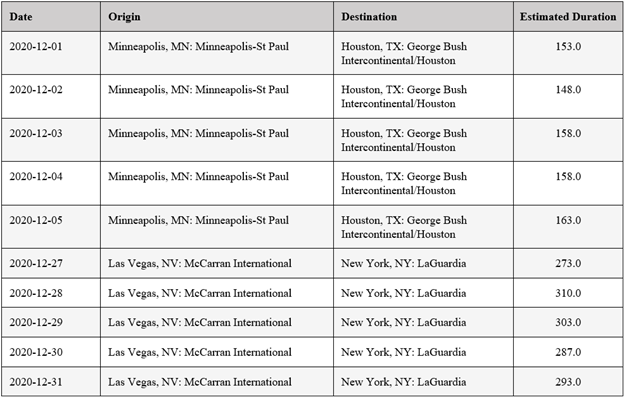

Once the model is trained, test predictions can be performed. The following table shows some example predictions for the simplified example:

Architecture

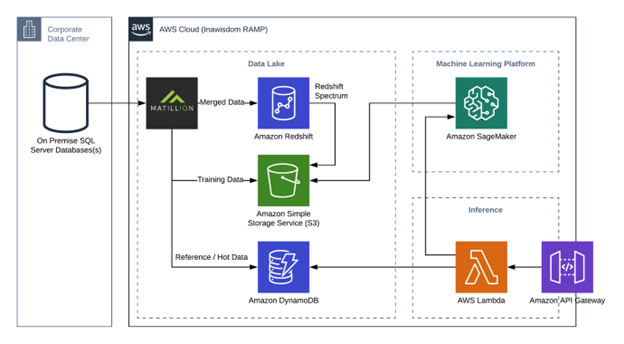

The architecture of the Digital Core and the transit time use case is based around providing a data lake/lake house. This includes ingesting data from a number of sources around the Aramex business and allowing the data to be centralized. Then, advanced analytics and machine learning are used to exploit that repository of information and the wealth of data contained within it. This was achieved using a serverless approach and the following architecture:

Figure 3 Architecture

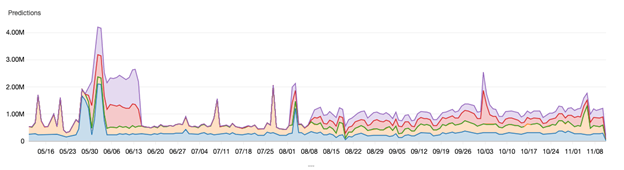

The architecture (as shown in Figure 3) has two key elements. The first is an Amazon Redshift cluster that has 24 nodes and stores 3.5 TB of data (the last 3 months) as hot data, and uses Redshift Spectrum to query over 7.5 years of data held in Amazon S3. The other key element is Amazon SageMaker, which was used for multiple aspects: discovery using notebooks, training the models (over 600 hours during the last year across all Aramex use cases), and real-time inference of up to 4 million predictions per day during peak periods like Eid and Black Friday (as shown in Figure 4).

Figure 4 Predictions Per Day

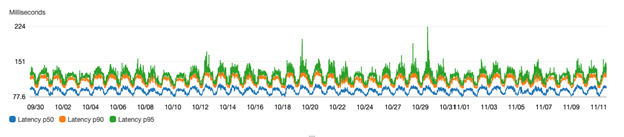

These elements are embedded and integrated using serverless technologies such as Amazon API Gateway and AWS Lambda. The results reach a target response time of below 200 milliseconds. Figure 5 shows the response times for p50, p90, and p95 percentiles in milliseconds.
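To make the API Gateway, Lambda, and SageMaker integration more concrete, here is a minimal sketch of a Lambda handler that forwards a feature vector to a SageMaker real-time endpoint using the `sagemaker-runtime` client from boto3. The endpoint name, the environment variable, the request shape (`{"features": [...]}`), and the response field are assumptions made for illustration. The actual Aramex implementation is not described in this post.

```python
import json
import os

# Hypothetical endpoint name, overridable through an environment variable.
ENDPOINT_NAME = os.environ.get("TRANSIT_TIME_ENDPOINT", "transit-time-xgboost")


def _default_client():
    # Imported lazily so the module can be loaded without boto3 installed.
    import boto3
    return boto3.client("sagemaker-runtime")


def handler(event, context, client=None):
    """Handle an API Gateway proxy event and return a transit time prediction."""
    client = client or _default_client()

    # Assumed request body: {"features": [numeric values in training order]}.
    features = json.loads(event["body"])["features"]
    payload = ",".join(str(value) for value in features)

    response = client.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="text/csv",
        Body=payload,
    )
    # A single CSV row in yields a single numeric prediction back.
    prediction = float(response["Body"].read().decode("utf-8"))

    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"transit_hours": prediction}),
    }
```

The optional `client` parameter is there only so the handler can be exercised locally with a stub; Lambda itself calls `handler(event, context)`.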

Figure 5 – response times
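For readers less familiar with percentile latencies, the sketch below shows one common way (the nearest-rank method) to compute p50, p90, and p95 from a list of response times and compare them with the 200 millisecond target. The latency samples are invented for illustration and are not the measurements behind Figure 5.

```python
import math
from typing import Dict, Sequence

TARGET_MS = 200  # target response time stated in the text


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of
    the samples less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples: Sequence[float]) -> Dict[str, float]:
    """Return the p50, p90, and p95 latencies of the samples."""
    return {f"p{pct}": percentile(samples, pct) for pct in (50, 90, 95)}


# Invented latency samples in milliseconds.
latencies_ms = [80, 95, 100, 110, 120, 130, 140, 150, 170, 190]
summary = summarize(latencies_ms)
print(summary)
print("within target:", all(value < TARGET_MS for value in summary.values()))
```

A p95 of 190 ms here would mean that 95% of requests completed in 190 ms or less, which is why tail percentiles are a stricter check than the average.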

Business Results

The transit time ML model has helped to increase the accuracy of delivery predictions by 74% and reduce call center volumes by 40%, as customers are given a more realistic delivery timeframe and therefore aren’t calling in to check where their deliveries are. The transit time use case, architecture, and ingestion process were delivered in just eight weeks in 2019, and the architecture has expanded over the past two years to cover more than 15 other use cases that leverage machine learning.

Several transportation and logistics companies like Aramex are choosing AWS machine learning technology due to the depth and breadth of AWS machine learning services, which can address many use cases and needs across the entire supply chain. AWS delivers three layers of ML technology.

At the top layer of the stack, AWS has AI services that customers can incorporate into their existing applications and business flows without having to build and train algorithms. Specifically for transportation and logistics, AWS provides capabilities like time series forecasting for predicting shipment volumes, fraud detection for making financial transactions more secure, and natural language recognition for extracting actionable information from legal and commercial documents.

In the middle is Amazon SageMaker, which provides every developer and data scientist with the ability to build, train, and deploy machine learning models at scale. It removes the complexity from each step of the machine learning workflow so customers can more easily deploy machine learning use cases, from predictive maintenance, to computer vision applied to the optimization of freight consolidation, to predicting customer behaviors.

The bottom layer is for expert machine learning practitioners, including advanced developers and data scientists. Companies using this layer are comfortable building, tuning, training, deploying, and managing models themselves, and working at the framework level, where AWS provides the most cost-effective range of compute resources for ML workloads available on the cloud.